By Gerald L. Maatman, Jr. and Justin R. Donoho

Duane Morris Takeaways: On December 3, 2024, the Federal Trade Commission (FTC) issued an order in In Re Intellivision Technologies Corp. (FTC Dec. 3, 2024) prohibiting an AI software developer from misrepresenting that its AI-powered facial recognition software was free from gender and racial bias. The same day, it issued two orders, in In Re Mobilewalla, Inc. (FTC Dec. 3, 2024) and In Re Gravy Analytics, Inc. (FTC Dec. 3, 2024), requiring data brokers to improve their advertising technology (adtech) privacy and security practices. These three orders are significant because they highlight how substantially the FTC increased its enforcement activities in the areas of AI and adtech in 2024.

Background

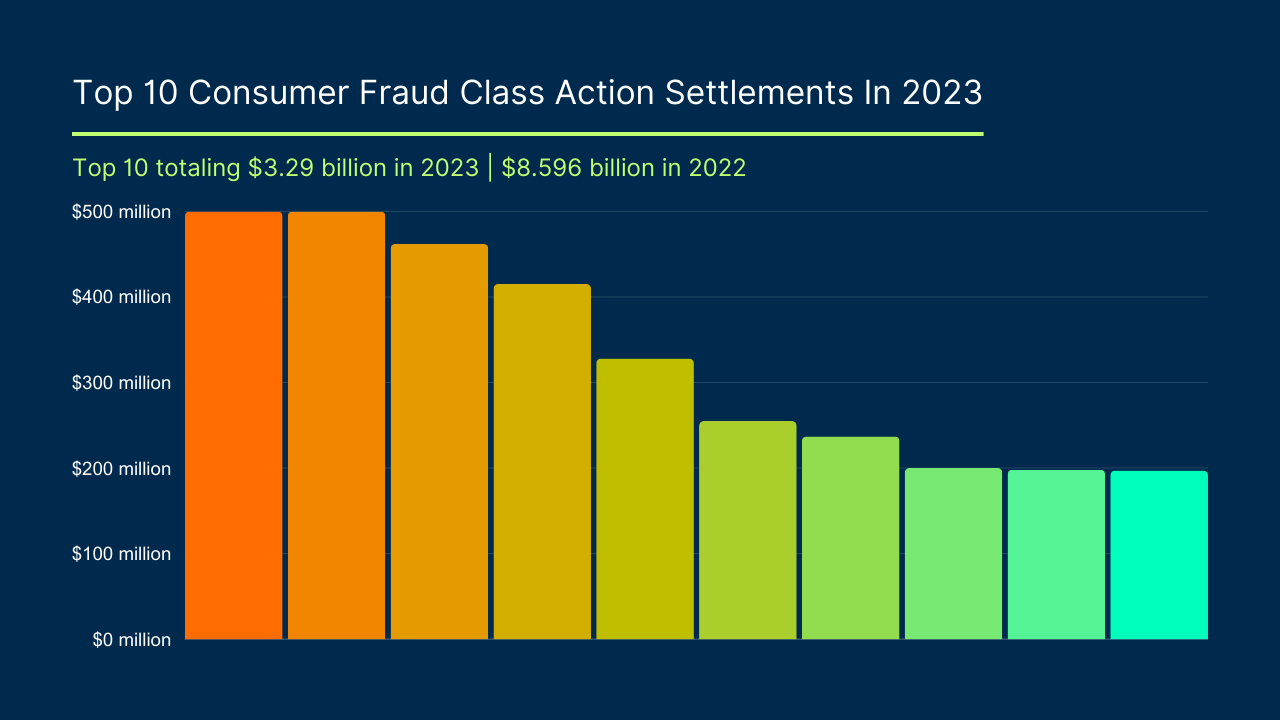

In 2024, the FTC brought and litigated at least 10 enforcement actions involving alleged deception about AI, alleged AI-powered fraud, and allegedly biased AI. See the FTC’s AI case webpage located here. This represents a fivefold increase over the at least two AI-related actions the FTC brought last year. See id. Just as private class actions involving AI are on the rise, so are the FTC’s AI-related enforcement actions.

This year, the FTC also brought and litigated at least 21 enforcement actions that it categorizes as involving privacy and security. See the FTC’s privacy and security webpage located here. This is roughly double the FTC’s privacy and data security case activity in 2023. See id. Most of these new cases involve alleged unfair use of adtech, an area that has also seen increased litigation activity in private class actions.

In short, this year the FTC officially achieved its “paradigm shift” toward focusing enforcement activities on modern technologies and data privacy, as forecast in 2022 by Samuel Levine, Director of the FTC’s Bureau of Consumer Protection, here.

All of these complaints were brought by the FTC under the FTC Act, which provides no private right of action.

The FTC’s December 3, 2024 Orders

In Intellivision, the FTC brought an enforcement action against a developer of AI-based facial recognition software embedded in home security products, which enables consumers to gain access to their home security systems. According to the complaint, the developer publicly described its facial recognition software as entirely free of any gender or racial bias, as shown by rigorous testing, when in fact testing by the U.S. Department of Commerce’s National Institute of Standards and Technology (NIST) showed that the software was not among the top 100 best-performing algorithms tested by NIST in terms of error rates across different demographics, including region of birth and sex. (Compl. ¶ 11.) Moreover, according to the FTC, the developer did not possess any testing of its own to support its claims of lack of bias. Based on these allegations, the FTC brought misrepresentation claims under the FTC Act. The parties agreed to a consent order in which the developer agreed to refrain from making any representations about the accuracy, efficacy, or lack of bias of its facial recognition technology unless it could first substantiate such claims with reliable testing and documentation as set forth in the consent order. The consent order also requires the developer to communicate the order to its managers and affiliated companies for the next 20 years, to submit timely compliance reports and notices, and to create and maintain various detailed records, including records regarding the company’s accounting, personnel, consumer complaints, compliance, marketing, and testing.

In Mobilewalla and Gravy Analytics, the FTC brought enforcement actions against data brokers that allegedly obtained consumer location data from other data suppliers and mobile applications and sold access to this data for online advertising purposes without consumers’ consent. According to the FTC’s complaints, the data brokers engaged in the unfair collection, sale, use, and retention of sensitive location information, all in alleged violation of the FTC Act. The parties agreed to consent orders in which the data brokers agreed to refrain from collecting, selling, using, and retaining sensitive location information; to establish a Sensitive Location Data Program, a Supplier Assessment Program, and a comprehensive privacy program, as detailed in the orders; to provide consumers with clear and conspicuous notice; to provide consumers with a means to request deletion of their data; to delete location data as set forth in the orders; and to perform compliance, recordkeeping, and other activities, as set forth in the orders.

Implications For Companies

The FTC’s increased enforcement activities in the areas of adtech and AI serve as a cautionary tale for companies using adtech and AI.

As the FTC’s recent orders and its 2024 dockets show, the FTC is increasingly using the FTC Act as a sword against alleged unfair use of adtech and AI. Moreover, although the December 3 orders do not expressly impose any monetary penalties, the injunctive relief they impose may be costly, and other FTC consent orders have imposed harsher remedies, including monetary penalties in the millions of dollars and algorithmic disgorgement. As adtech and AI continue to proliferate, organizations should consider, in light of the FTC’s increased enforcement activities in these areas (and the increased activities of the plaintiffs’ class action bar and the EEOC in these areas as well, as we blogged about here, here, here, here, and here), whether to modify their website terms of use, data privacy policies, and all other notices to website visitors and customers to describe their use of AI and adtech in greater detail. Doing so could help deter, or defend against, a future enforcement action or class action similar to the many being filed today that allege the omission of such details and seek a wide range of injunctive and monetary relief.